If you read our blog, you’ll know the dangers of sharing your personal information online. You might be using the MySudo all-in-one privacy app to safeguard your personal email and phone number with secure alternatives, along with MySudo’s private browsers and virtual cards, all packaged inside digital identities called Sudos. It’s innovative privacy technology. If you’re new to the idea, jump on board.

But your email, phone number, credit card, and even your name, street address and SSN are only one layer of the personal data, or personally identifiable information (PII), you need to protect. There’s another, hidden layer of personal data that only comes to light once you really dive deep into data privacy: residual data, also known as “behavioural surplus data” or “digital exhaust”. (Jump to the end of this article to learn how MySudo protects your residual data too.)

What is residual data?

In episodes 6 and 7 of our Privacy Files podcast, hosts Rich and Sarah explore the “shadow operation” of “residual data” and, quite frankly, it will blow your mind.

“Residual data” or “behavioural surplus data” refers to the digital traces of information you leave behind on the internet without even knowing it. Think: the arrangement of your facial muscles in a photo on Facebook, or a misspelt word in a search term, or your typing speed. It is every single piece of information about you that can be gleaned from your every like, share, post, download, sign-up, search and so on. Yes, crazy, right?
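To make the idea concrete, here is a minimal Python sketch of how two of those traces, typing speed and a misspelt word, could be pulled out of a single search. It is a toy illustration only: the `Keystroke` record, the `KNOWN_WORDS` list and the `residual_signals` function are hypothetical names invented for this example, not a description of how any real company collects data.

```python
from dataclasses import dataclass


@dataclass
class Keystroke:
    char: str
    t_ms: int  # hypothetical timestamp: milliseconds since the first keypress


# Tiny stand-in for a dictionary; a real spell checker would be far larger.
KNOWN_WORDS = {"cheap", "flights", "to", "sydney"}


def residual_signals(keystrokes):
    """Derive by-product signals from a search the user only meant as a search."""
    if not keystrokes:
        return {"query": "", "chars_per_second": 0.0, "misspelt_words": []}
    typed = "".join(k.char for k in keystrokes)
    elapsed_s = (keystrokes[-1].t_ms - keystrokes[0].t_ms) / 1000
    speed = len(typed) / elapsed_s if elapsed_s > 0 else 0.0
    misspelt = [w for w in typed.lower().split() if w not in KNOWN_WORDS]
    return {
        "query": typed,
        "chars_per_second": round(speed, 2),
        "misspelt_words": misspelt,
    }


# Simulate one keypress every 200 ms for a search containing a typo.
query = "cheap flihgts to sydney"
log = [Keystroke(c, i * 200) for i, c in enumerate(query)]
signals = residual_signals(log)
print(signals)
```

You only meant to search for flights, but the same keystrokes also reveal how fast you type and which words you tend to get wrong.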

And who is monitoring, gathering and manipulating this seemingly innocuous information? Big Tech, of course. Google, Facebook et al. are collecting the information, feeding it to sophisticated algorithms or “predictive models” and producing highly lucrative “predictive behaviour products” they sell to brands to drive advertising revenue.

What makes this residual data so profitable? Why do brands buy it? Because this data is so highly attuned to your digital habits that it can accurately predict your future behaviour—what you will buy—and therefore virtually guarantee advertising dollars for companies using it to personalize ads.

Residual data is a by-product of surveillance capitalism

The business model driving profit for Big Tech is called surveillance capitalism and, as Rich and Sarah explain in episodes 6 and 7 of Privacy Files, residual data greases the wheels of surveillance capitalism. Let’s go through it.

What is surveillance capitalism?

Surveillance capitalism is a term coined by Harvard Professor Emerita Shoshana Zuboff, author of the masterwork, “The Age of Surveillance Capitalism.”

In the ground-breaking VPRO documentary, The big data robbery: Shoshana Zuboff on surveillance capitalism, surveillance capitalism is called “the dubious mechanisms of our digital economy” and Professor Zuboff explains these mechanisms are considered surveillance because they are “… operations that are engineered as undetectable, indecipherable, cloaked in rhetoric that aim to misdirect, obfuscate, and just downright bamboozle us all the time.”

Professor Zuboff says residual data is “our private and personal experiences … used as the raw material of extremely profitable digital products,” and “this is being conducted at a layer that is not accessible to us.”

Unsurprisingly, Google and Meta are the worst offenders: “Thanks to its navigation and search engine, Google knows where we are all the time, and what we think. Facebook knows our hobbies, preferences and friends because they glean so much information from the digital traces we leave behind unwittingly … spelling errors in search terms, colour buttons you prefer, type speed, driving speed … residual data,” Professor Zuboff says.

She gives the history: “Way back at the beginning … in 2001, 2002 … these data were considered just extra data … waste material … digital exhaust or data exhaust … but eventually it was understood that these waste materials harboured these rich predictive data.”

Professor Zuboff explains that, today, companies palm off this stealthy data collection as a means of improving their service, but in reality they are using most of it to train their predictive models and map patterns of human behaviour from hundreds of millions of people. “From these models, big tech can see how people with certain characteristics typically behave over time, which allows them to predict what you’re likely to do now, soon and later,” she says.

Shockingly, Professor Zuboff says the models even know what you’re going to feel like for dinner tonight.

Of course, you might say, “But I like personalized ads,” or “I have nothing to hide, so I don’t care what information they take.” Professor Zuboff says these perspectives are “profound misconceptions of what’s really going on.”

“We think that the only personal information they have about us is what we’ve given them, and we think we can exert some control over what we give them and therefore we think that our calculation or trade-off here is something that is somehow under our control; that we understand it. What’s really happening is that we provide personal information, but the information that we provide is the least important part of the information that they collect about us,” she says.

She reminds us we have no idea what today’s algorithms can predict about us (though it will include our personality traits, sexual orientation and political persuasion) or where the information will end up. She cites cases of facial recognition data being sold to companies in China, for instance.

We don’t know about these processes, Professor Zuboff says, because they have been disguised: “They operate in stealth … Our ignorance is their bliss.”

Watch the VPRO documentary to find out more, including:

- Facebook’s contagion experiments using subliminal messaging

- How Pokémon Go was invented and incubated by Google based on a CIA start-up technology and the “lure modules” it uses to bring foot traffic into stores

- The risks with smart home devices like Google Nest.

And listen to episodes 6 and 7 of our Privacy Files podcast to hear Rich and Sarah’s take on residual data and surveillance capitalism.

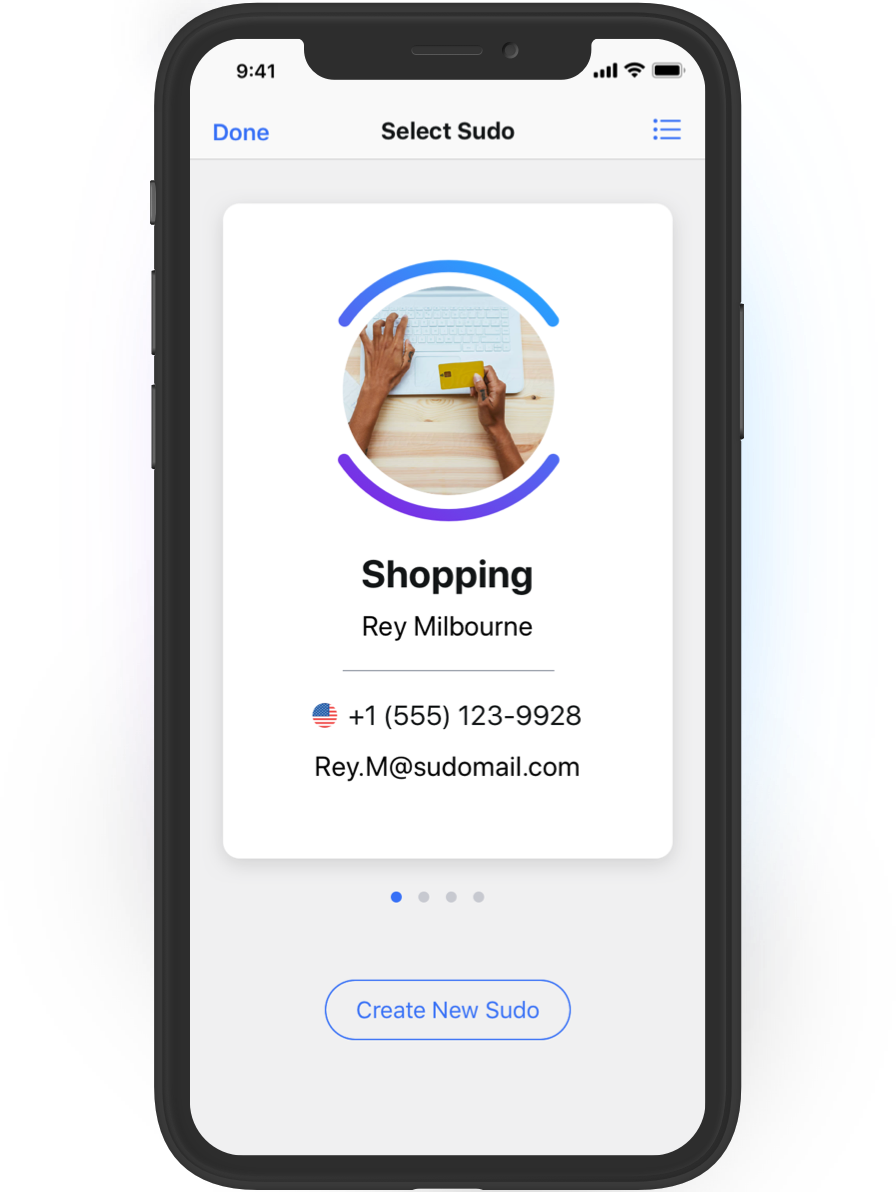

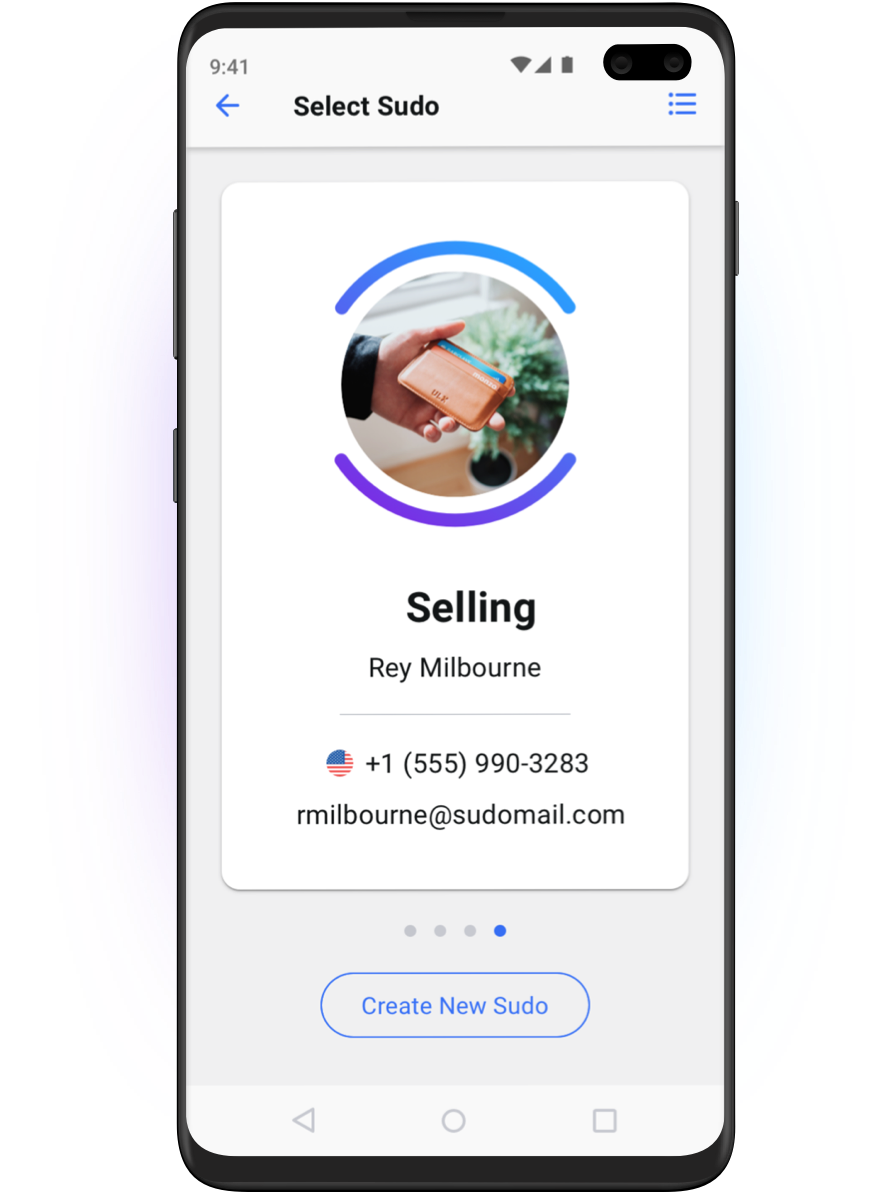

The key takeaway? Scammers want your name, email, address, phone number, credit card, etc. Big Tech wants your behavioural patterns. You can protect both with MySudo by:

- Using Sudo digital identities with in-built alternative email and phone numbers, browsers and virtual cards to safeguard your personal ones, AND

- Creating up to 9 different Sudos to compartmentalize and separate your online activity, to break your data trail and dilute your digital exhaust.
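Why does compartmentalizing help? Here is a rough Python sketch of the idea, assuming a tracker that links activity by the contact details you sign up with. The `build_profiles` function, the activity names and the `example.com` addresses are all hypothetical. Real trackers can also use other signals, such as device or network details, so treat this as a simplified model rather than a description of how any tracker or MySudo actually works.

```python
from collections import defaultdict


def build_profiles(events):
    """Group (identifier, activity) pairs the way a tracker keyed on
    contact details might (a simplifying assumption for this sketch)."""
    profiles = defaultdict(set)
    for identifier, activity in events:
        profiles[identifier].add(activity)
    return dict(profiles)


activities = ["shopping", "health forum", "job search", "dating app"]

# One personal email used everywhere: every activity lands in one profile.
single = build_profiles([("me@example.com", a) for a in activities])

# A separate (hypothetical) identity per activity: the trail is split up.
separate = build_profiles(
    [(f"sudo{i}@example.com", a) for i, a in enumerate(activities, start=1)]
)

largest_single = max(len(acts) for acts in single.values())
largest_separate = max(len(acts) for acts in separate.values())
print(largest_single, largest_separate)
```

In this toy model, the single email produces one profile covering all four activities, while the separate identities produce four small profiles with one activity each.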

Tired of Big Tech spying on you? Go to MySudo.com.
