ChatGPT is so hot right now and many of us didn’t even see it coming.

The generative artificial intelligence (AI) chatbot from Microsoft-backed OpenAI has taken the world by storm and flustered the heck out of big tech.

ChatGPT is reportedly the fastest-growing consumer app of all time, hitting 100 million monthly active users after only 2 months. By comparison, Snapchat took 3 years to reach that milestone.

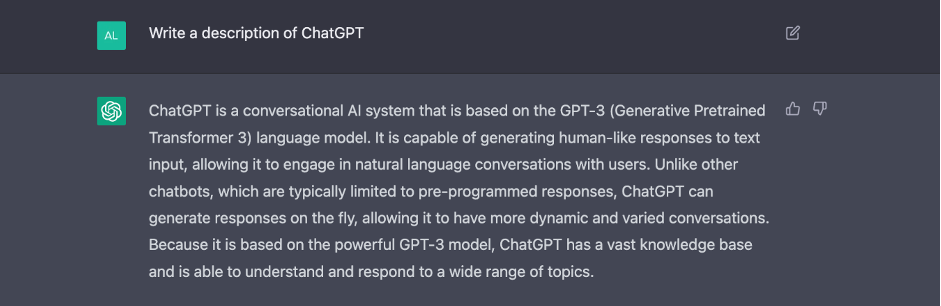

What is ChatGPT?

Let’s ask the chatbot itself:

A human at a leading European university put it this way:

“ChatGPT is a chatbot based on a Large Language Model (LLM). That means you can ask a question (prompt) and ChatGPT will write a text for it. This ranges from writing a limerick to writing scientific articles. To generate text, ChatGPT does not need to understand the prompt (and the answer). Instead, the prompt gives the chatbot a context within which it will use probability to see which words best line up, forming sentences. It generates new unique text and does not show existing texts (like ordinary search engines). It is therefore not possible to check the authenticity of texts with plagiarism software.”

So, ChatGPT is an AI chatbot that can take a written request from a human and create human-like responses, such as writing content and code. OpenAI says it can answer questions and ask follow-up questions, admit mistakes, challenge incorrect premises and reject inappropriate requests (although the company admits this isn't foolproof).

ChatGPT is a big deal because it puts a generative AI model, built with deep learning and pre-trained on vast amounts of language data, into the hands of the general public in an easy conversational format. That training lets it generate more human-like responses and follow more context than earlier chatbots. It's a bit like a customer service chatbot you'd see on a retail web site, but infinitely more human-like, conversational, and useful.

ChatGPT is so good that some schools around the world have banned it for fear of cheating and plagiarism.

Generative AI tools do have limitations. For starters, they frequently get things wrong (just ask Google Bard!), their responses rely on their training data (for ChatGPT, that's data from 2021 and earlier), and human requests must be written in a way the tool will understand. Even so, use cases are emerging across every industry. That means the tech giants are falling over each other to be first with the goods in a market that's expected to be worth US $30.5 billion in just five years.

Google is reportedly freaking out about the threat to its search empire, even as it announces plans to bring the technology into search. And while its answer to ChatGPT, Bard, has just debuted with embarrassing and costly consequences, Google says it has at least 20 more AI products in the works.

Microsoft has reportedly invested $10 billion in OpenAI, gaining a 46% stake in the company. It is currently deploying ChatGPT-like technology in its Bing search engine and Edge browser, and its offerings are expected to damage Google's business.

Meta has been quieter to date, but it has named generative AI a key theme for 2023. Experts suggest Meta might use the technology more for business functions than Google and Microsoft, and that it has the resources to outrun Alphabet and Microsoft in this race.

Overall, people seem torn between awe at ChatGPT's capabilities and concern about the threat it might pose to jobs and industries.


So, what about ChatGPT and privacy?

If this new technology learns from vast amounts of data on the internet, what does it mean for our personal information?

It means it’s a data privacy nightmare.

University of Sydney Professor Uri Gal, writing about ChatGPT in a widely shared article for The Conversation, says: "If you've ever posted on the internet, you should be concerned … The problem is it's fuelled by our personal data."

Anonyome Labs' Privacy Files podcast hosts Rich and Brian raised the same concerns in ep. 11. Demonstrating everything that's both awesome and terrifying about generative AI, the episode opens with a spookily realistic ChatGPT-generated intro by Snoop Dogg. Check it out. Rich and Brian then go on to ask the big question:

Where are the boundaries for ChatGPT?

Already we're seeing at least 6 red flags around ChatGPT and privacy:

- ChatGPT was reportedly trained on 300 billion words and 570GB of data from the internet: books, web texts, Wikipedia, articles, posts and web sites, including, commentators say, personal information obtained without consent. The success of ChatGPT and competitors like Google Bard comes down to data: the more data a model can analyse and assess, the more accurate it will be. But OpenAI is yet to define the privacy boundaries around its data use, and its privacy policy is considered "flimsy."

- OpenAI didn't ask permission to use our personal data, so using it breaches "contextual integrity": the principle that information should only be used in line with the context and purpose for which it was originally shared. That's a potential regulatory issue.

- We can't ask OpenAI to delete our personal information from the data ChatGPT was trained on, which raises more legal questions. There's no "right to be forgotten," which matters because ChatGPT regularly returns inaccurate or misleading information.

- The data ChatGPT was trained on contains proprietary and copyrighted material, such as books. But no-one is being compensated for their content: not even the famous authors among us. Remember, if you're not paying for the product, you are the product, and soon you'll have to pay and remain the product.

- Information we type into the chatbot's request bar is recorded and can be used to train future versions of the model, potentially exposing sensitive information (think: medical, legal …).

- OpenAI collects user data like most other apps. According to that "flimsy" privacy policy, that data includes IP address, browser type and settings, the type of content the user is engaging with, the features they're using and the actions they're taking. And OpenAI shares the information with unspecified third parties without user consent.

Professor Uri Gal wraps it up nicely: “Its potential benefits notwithstanding, we must remember OpenAI is a private, for-profit company whose interests and commercial imperatives do not necessarily align with greater societal needs.

“The privacy risks that come attached to ChatGPT should sound a warning. And as consumers of a growing number of AI technologies, we should be extremely careful about what information we share with such tools.”

Protect yourself with MySudo

In this highly connected world, we need an armoury of privacy tools to stay safe online.

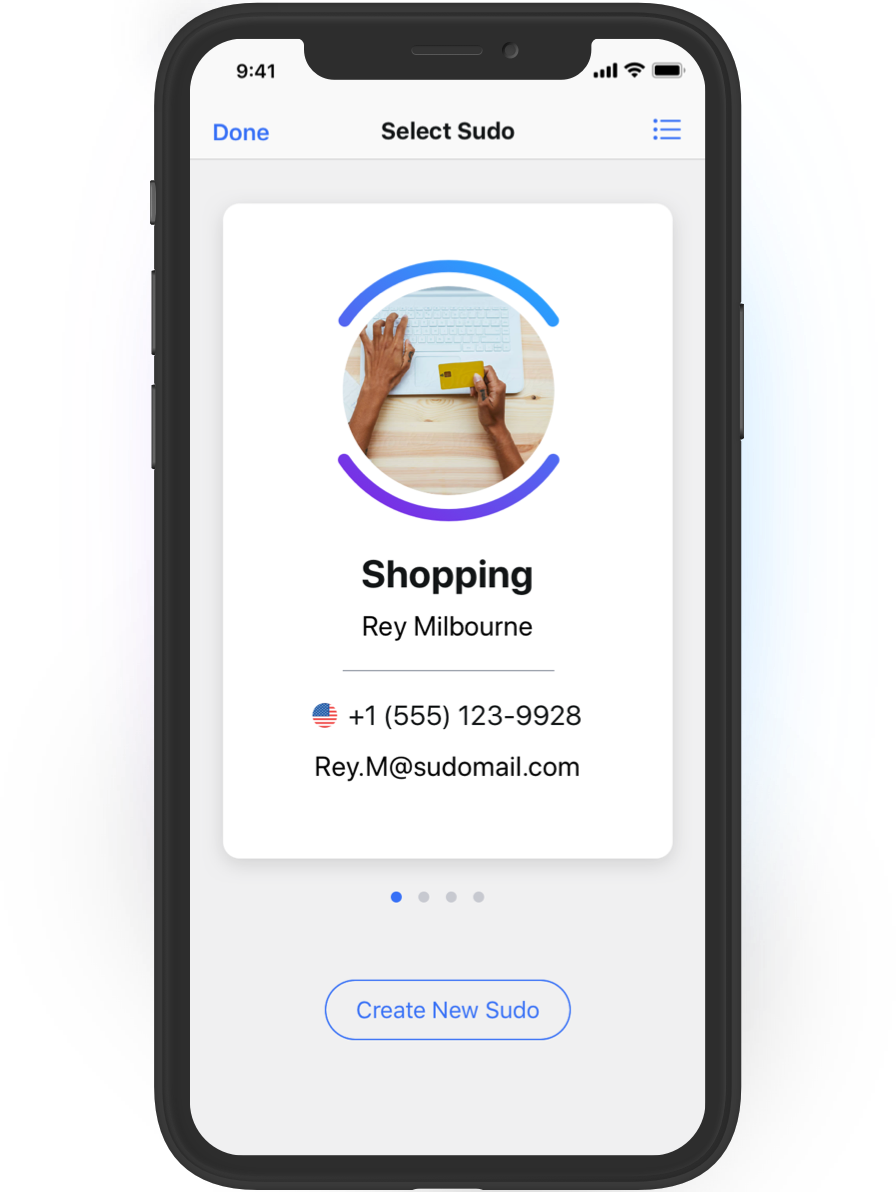

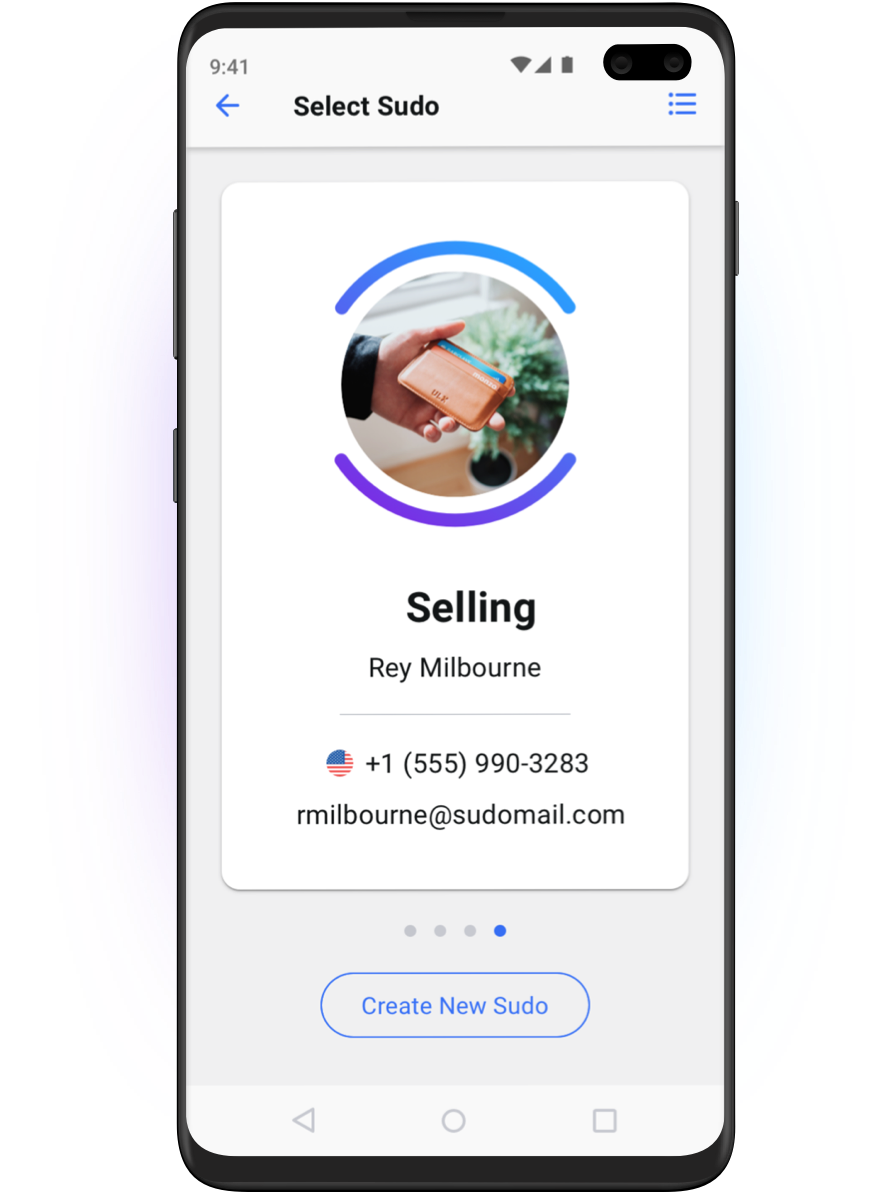

MySudo is the world’s only all-in-one privacy app, offering private and secure email, phone, browsers and virtual cards*, built into Sudo digital identities to shield your personal information and break your data trail.

MySudo’s superpower is compartmentalization, where you create up to 9 different Sudo digital identities to silo and separate your personal information.

Download MySudo today.

Listen to ep. 11 of the Privacy Files podcast for the full discussion on ChatGPT and privacy, then hit play on ep. 12, which explains exactly how to protect your privacy with MySudo.

*US and iOS only. Android and more locations coming soon.